winter quarter

Playing Frisbee with a cat at a park

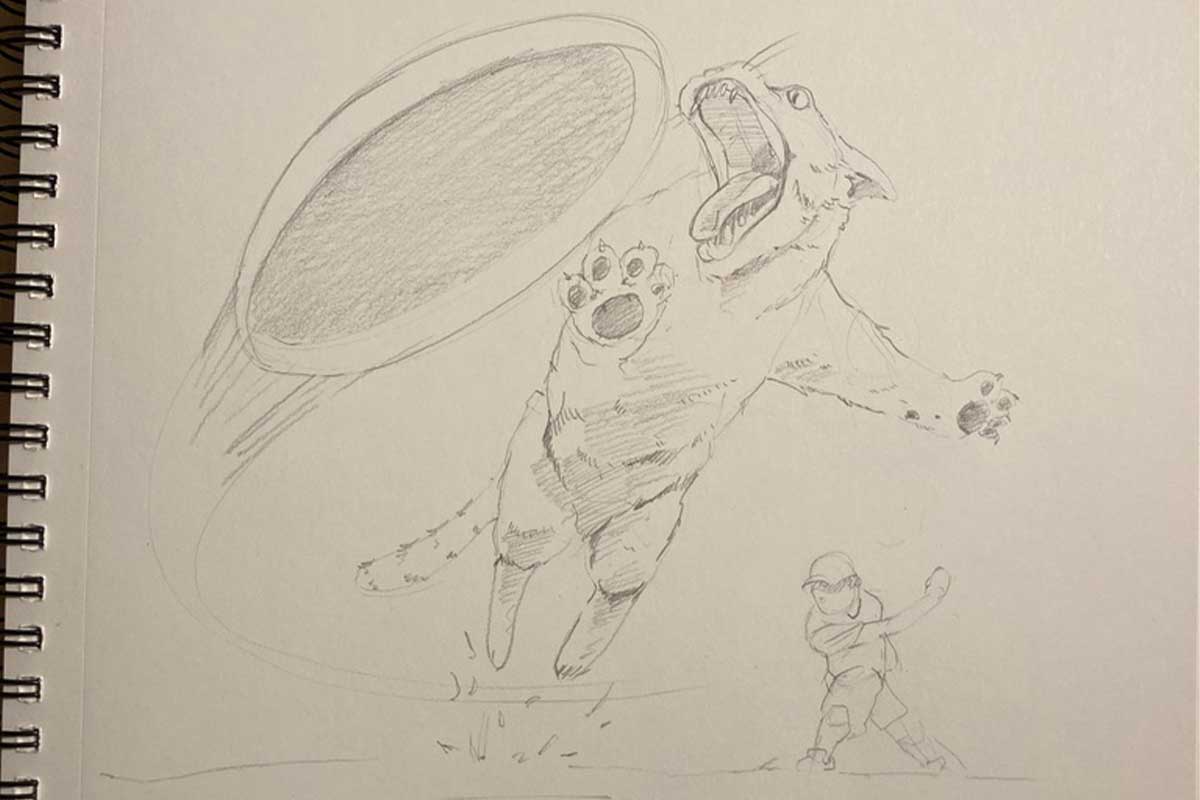

That was the one I chose to do. I imagined a low-angle shot of a cat leaping up to catch the frisbee. I wanted to capture that sort of whiplashing motion that dogs sometimes make in midair, and I thought that shape would be a good counter to the shape of the frisbee. I also imagined a person in the background whose pose suggests they've just hurled something. Here’s the sketch I did:

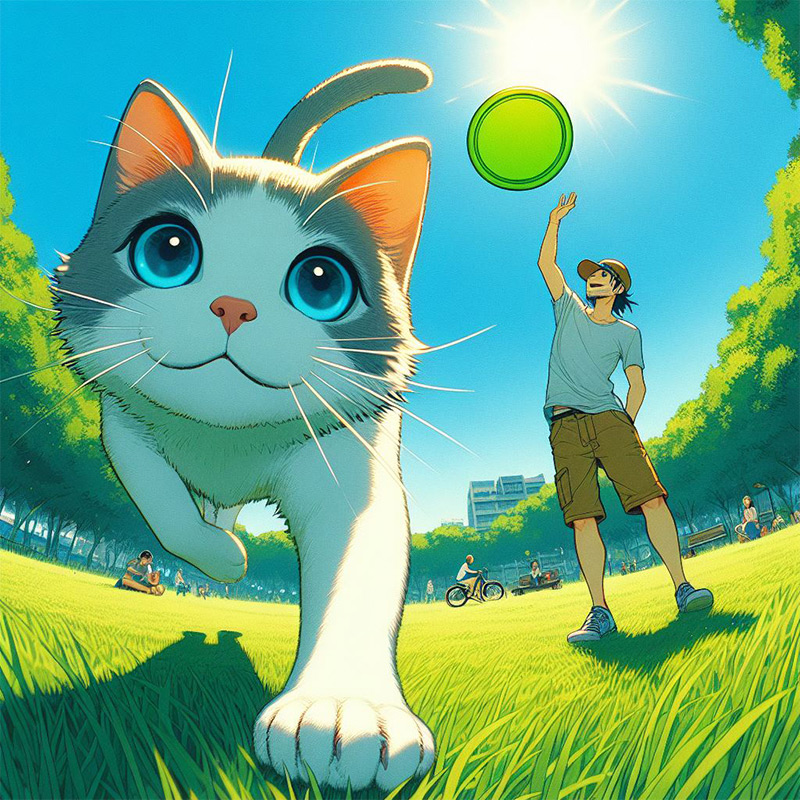

I used the Bing Image Creator (which I’m pretty sure is just DALL-E 3), and my first prompt was:

Cat catching a frisbee on a sunny day, man in the distance throwing; low angle

And here’s what I got:

Not bad except that the cat seems to have two left arms. My second prompt was a little more specific, and I wanted to change the style:

Man and cat playing frisbee in the park on a sunny day; low angle; in the style of 80’s anime; warm color palette

And here’s what that prompt returned:

My final prompt was the most specific of all:

A cat jumping up to catch a frisbee; man in the background who just threw the frisbee; low angle; cat heading towards the camera; sunny day in a park; in the style of 80’s anime; green color palette with blue accents

And this is probably the most engaging image:

I don’t have to point out the technical flaws in this picture. My experience with this image generator is basically the same as my experience with AI more broadly: curiosity followed by absurdity followed by boredom. But after last week’s speaker, I’m starting to come around to how useful AI could be in rendering the more tedious parts of an image-based project. Let’s say you were designing a wallpaper in the style of William Morris. You could render individual flower bulbs, leaves, geckos or whatever, scan them, and then feed those to an AI, saying, “make me a repeated pattern using only these elements on a maroon background; 6 inches by 12 feet.” Or maybe the New York Times commissions you to do an illustration and you think it would be cool to render an aerial view of a busy New York street. You could just make and color the background in one file, make a few drawings of people and cars, and tell the AI to populate the street with people in the style of those you already drew. Or even better, the AI could render the building windows, which is always such an ungodly pain in the ass. There are possibilities.